This week I worked with a customer who used F5 to load balance their vRA distributed environment, and it proved that even a small deviation from the recommended configuration can break your entire setup. For this reason, I decided to document the full F5 load balancer configuration in this post. It can still be helpful to get you started with other load balancers as well.

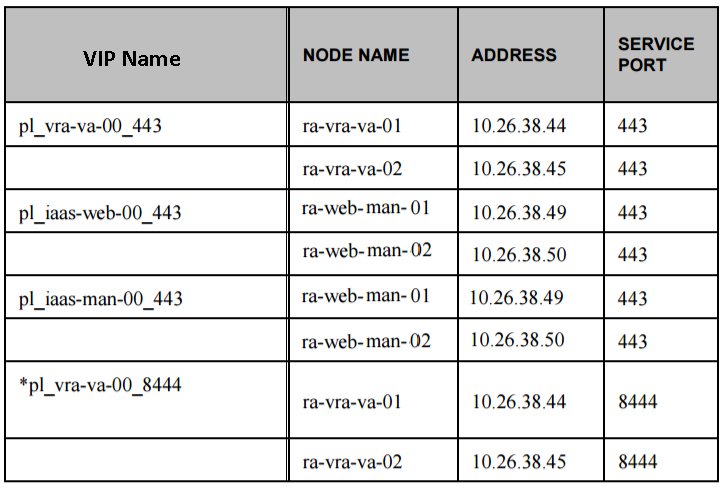

As the medium distributed install seems to be the most common among customers, I will use it as the base of this post, but I will cover the differences in the large distributed install as well. Let’s start with the list of our setup components. As you will notice in the table below, the Manager Service and Web share the same servers, unlike the large architecture, where Web and Manager Service are separated onto different servers. That said, you still need to set up separate VIPs, one for the Manager Service and one for Web, in both medium and large distributed setups. The main difference is that in a medium setup both VIPs point to the same servers. You also need to set up another VIP for the vRA appliances. Finally, you don’t need to set up a VIP for DEM Workers, DEM Orchestrators, Proxy Agents, embedded vCO, the embedded vPostgres database, or IDM. I can’t count the times I have seen a load balancer set up for components that do not require one, so here is your hint.

While many customers try to have the load balancers configured and in use during the initial installation of a vRA distributed environment, I highly recommend against that approach, as any mistake in your load balancer configuration can make the vRA installation fail and make it much harder to troubleshoot. The recommended approach is to initially point the DNS A record for each VIP name to the first host of each component and then, after the installation is completed and tested, repoint that A record to the VIP on your load balancer. In our example, the initial DNS A records are as follows:

plvrava00 ==> 10.26.38.44

pliaasweb00 ==> 10.26.38.49

pliaasman00 ==> 10.26.38.49
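The records above are easy to check from a script before you start the installation, and again after the later cut-over with the load balancer VIP IPs substituted in. What follows is a minimal sketch rather than a finished tool: the function name `check_records` is made up for illustration, and it assumes the short names resolve from the machine running it (for example through a DNS search suffix).

```python
import socket

# Initial A records from this post: each VIP name points at the first node.
EXPECTED = {
    "plvrava00": "10.26.38.44",
    "pliaasweb00": "10.26.38.49",
    "pliaasman00": "10.26.38.49",
}


def check_records(expected, resolve=socket.gethostbyname):
    """Return {name: (expected_ip, resolved_ip_or_None)} for every mismatch."""
    mismatches = {}
    for name, want in expected.items():
        try:
            got = resolve(name)
        except OSError:
            got = None  # the name does not resolve at all
        if got != want:
            mismatches[name] = (want, got)
    return mismatches


if __name__ == "__main__":
    for name, (want, got) in check_records(EXPECTED).items():
        print(f"{name}: expected {want}, resolved {got}")
```

An empty result means every VIP name resolves to the address you expect. The `resolve` parameter is only there so the check can be exercised without touching real DNS.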

After the installation is completed and the setup has been tested, you will point your VIP names in DNS to your load balancer VIP IPs instead of the node IPs. Before you do, make sure you follow the steps below to configure your VIPs on your F5.

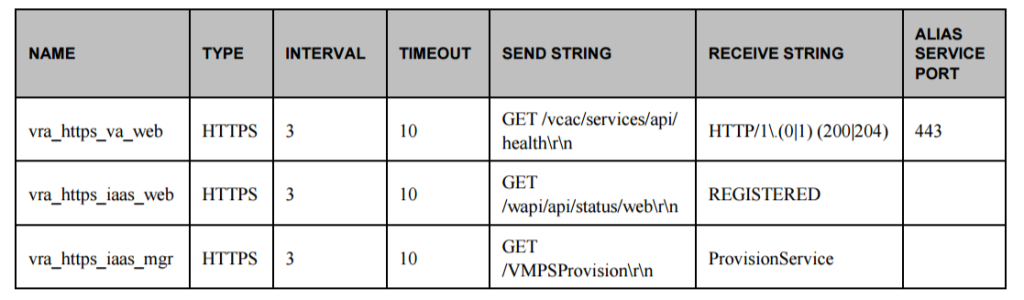

Now that your vRA setup is up and running and ready to be load balanced, the first thing to configure on your load balancer is the health monitors. The table below covers the vRealize Automation 7.x health monitor URLs. Please note these are different from the ones used previously in vRA 6.x.

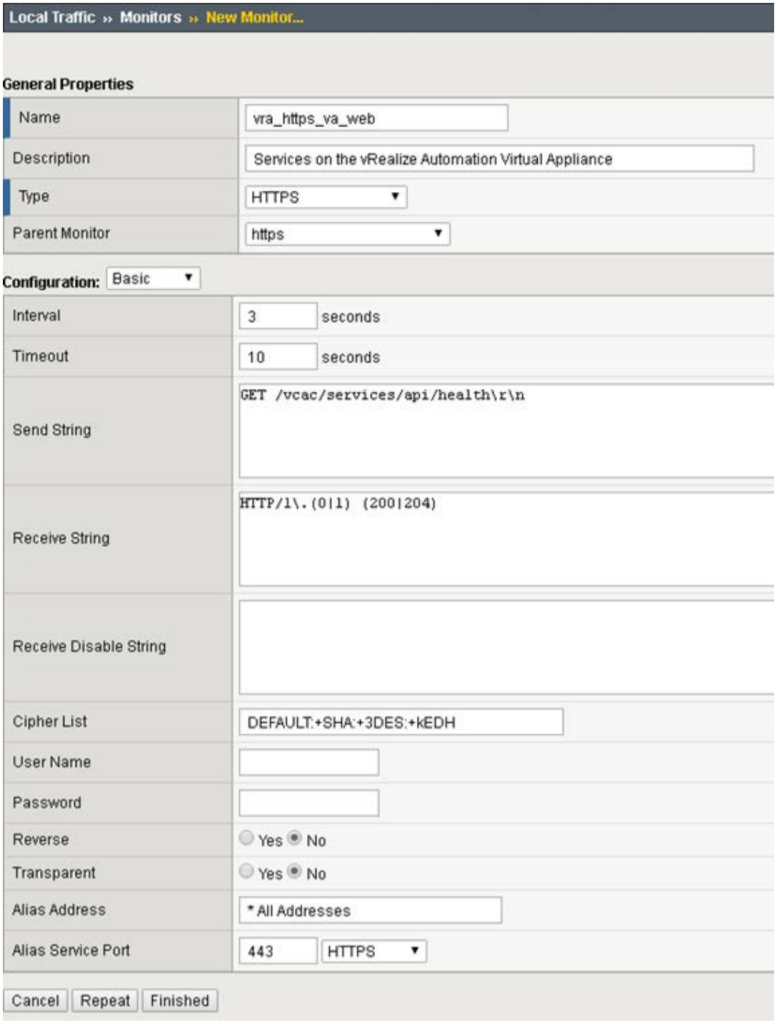

The screenshot below demonstrates how to configure these health monitors in your F5 load balancer.

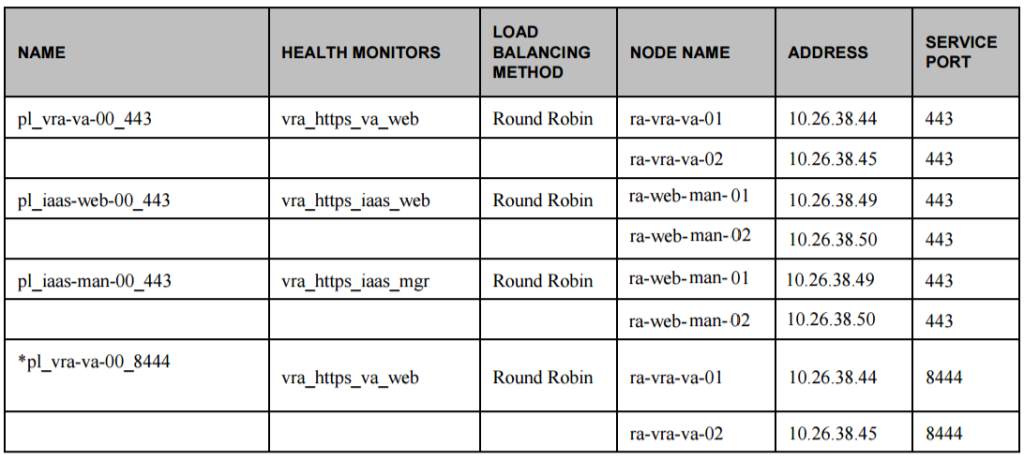
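Before relying on the F5 monitors, it can help to probe the same URLs by hand against each node, so that a failing monitor can be traced to either vRA or the F5 configuration. The sketch below is illustrative only. The paths, accepted status codes, and expected strings in `MONITORS` are assumptions based on VMware's vRA 7.x load balancing guide, so check them against the health monitor table above before trusting the result. Certificate verification is switched off on the assumption that the nodes use self-signed certificates.

```python
import ssl
import urllib.error
import urllib.request

# ASSUMED monitor definitions: (path, accepted status codes, expected body text).
# Verify every value against the health monitor table before use.
MONITORS = {
    "vra_appliance": ("/vcac/services/api/health", {200, 204}, None),
    "iaas_web": ("/wapi/api/status/web", {200}, "REGISTERED"),
    "iaas_manager": ("/VMPSProvision", {200}, "ProvisionService"),
}


def is_healthy(status, body, ok_statuses, expect_text):
    """Apply the monitor's receive rule to one HTTP response."""
    if status not in ok_statuses:
        return False
    return expect_text is None or expect_text in body


def probe(host, monitor, timeout=10):
    """Send the monitor's GET request to one node and evaluate the answer."""
    path, ok_statuses, expect_text = MONITORS[monitor]
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # assumption: self-signed certificates
    ctx.verify_mode = ssl.CERT_NONE
    url = f"https://{host}{path}"
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=ctx) as resp:
            status = resp.status
            body = resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        status, body = err.code, ""  # the server answered with an error status
    except OSError:
        return False                 # connection refused, timeout, DNS failure
    return is_healthy(status, body, ok_statuses, expect_text)
```

For example, `probe("pliaasweb00", "iaas_web")` would test the Web component through whatever address that name currently resolves to. Point it at the individual node names to test each pool member separately.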

Alright, now that you have set up your health monitors for the vRA Appliance, Web, and Manager Service, the next step is to configure your server pools as shown in the table below. Please note port 8444 is optional and is only used for remote console connections.

Your completed server pool configuration should look like the screenshots below.

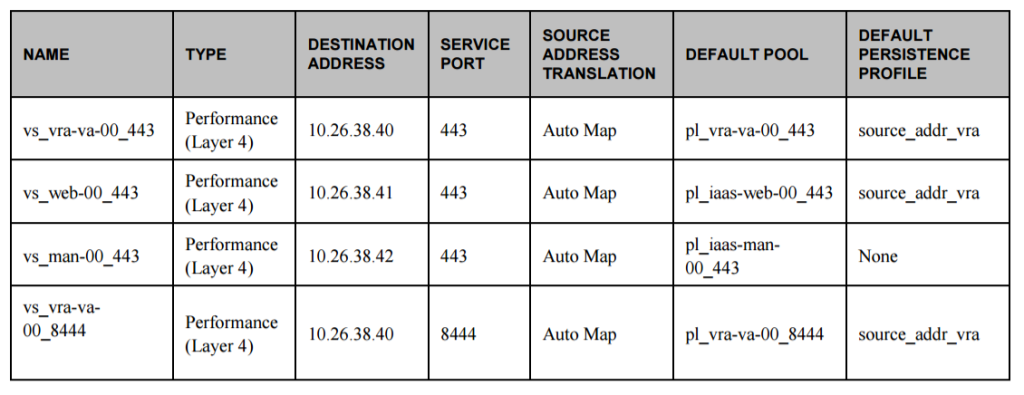

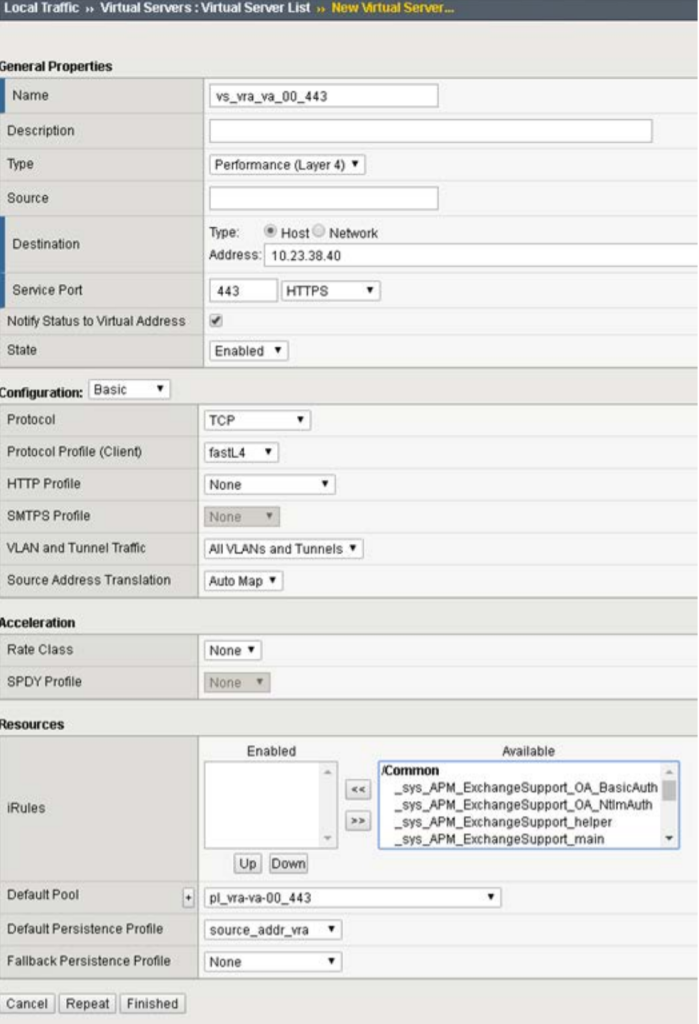
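A quick way to sanity-check the pool members is to confirm that every node accepts TCP connections on its pool port. The sketch below is a rough illustration: the node names and pool names are hypothetical placeholders, and port 443 for the Web and Manager Service pools is an assumption, so replace all of them with the values from your own pool table. It does reflect the medium layout described earlier, where the Web and Manager Service pools contain the same servers.

```python
import socket

# HYPOTHETICAL node names; replace them with your own pool members.
VA_NODES = ["va-node-1", "va-node-2"]
IAAS_NODES = ["iaas-node-1", "iaas-node-2"]  # medium: Web and Manager share servers

# Pool names and the 443 ports for Web/Manager are assumptions for this sketch.
POOLS = {
    "vra_appliance_443": (VA_NODES, 443),
    "vra_appliance_8444": (VA_NODES, 8444),  # optional, remote console only
    "iaas_web_443": (IAAS_NODES, 443),
    "iaas_manager_443": (IAAS_NODES, 443),
}


def port_open(host, port, timeout=3):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def unreachable_members(pools, check=port_open):
    """Return sorted (pool, host, port) tuples for members that fail the check."""
    failed = []
    for pool, (hosts, port) in pools.items():
        for host in hosts:
            if not check(host, port):
                failed.append((pool, host, port))
    return sorted(failed)


if __name__ == "__main__":
    for pool, host, port in unreachable_members(POOLS):
        print(f"{pool}: {host}:{port} is not reachable")
```

Keep in mind that only one Manager Service is active at a time, so the passive node may legitimately fail an application-level check even when its port is open. This sketch tests TCP reachability only.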

Now that your Server Pools are ready, it’s time to configure your Virtual Servers in F5. The below table and screenshot demonstrate the required configuration for vRealize Automation 7.x.

This should get you set and ready to enjoy a distributed vRA environment that is well load balanced behind your F5 load balancer. I hope you find the information in this post helpful, and as usual, leave any questions in the comments area.

3 responses to “vRealize Automation 7.x F5 Load balancer Configuration”

Hi, this is an outstanding post and very valuable.

It would however be great if you could post the settings for the source_addr_vra persistence profile.

I assume it closely replicates the base source_addr profile but would like to see what setting you put in here.

Only other question is in the 7.3 reference architecture here:

https://docs.vmware.com/en/vRealize-Automation/7.3/vrealize-automation-73-reference-architecture.pdf

It specifically says

“You can use a load balancer to manage failover for the Manager Service, but do not use a load-balancing algorithm, because only one Manager Service is active at a time. Also, do not use session affinity when managing failover with a load balancer.”

I notice you have not applied a consistency group for the persistence profile, so session affinity will be off, but you have set it to round robin. Is that because you cannot avoid selecting a load-balancing algorithm, or is there another reason?

Hi Anthbro,

My article was written in the days of vRA 7.2. The ability to allow the Manager Service to be active on both nodes is a new capability in 7.3 and beyond.

Cheers,

Eiad