Demand for cross-site vMotion and high availability/business continuity has grown over the past year or so, and I have started to see more customers implementing or considering such a solution. I remember when VMware added support for vSphere stretched clusters about a year back, when only EMC VPLEX was on the Hardware Compatibility List. EMC has actually done a massive amount of work with VMware to verify the solution and make sure it picks up. Guess what: it did not take long for other storage vendors to see how quickly vSphere Stretched Cluster is picking up, and now both NetApp and IBM have configurations that are supported in a stretched cluster.

In this post I will discuss many of the best practices that you will want to follow when building a vSphere Stretched Cluster. While many of these recommendations are generic and apply to most vSphere 5.1 Stretched Clusters, I have only validated them in an EMC VPLEX environment. Please make sure you check with your storage vendor before applying them to a different environment.

Before you start implementing a vSphere Stretched Cluster, you will want to understand how it works and what enables it. What you are trying to achieve here is to enable VMware HA and VMware vMotion to work across sites. As you already know, both of these features require shared storage between all hosts within a single vSphere cluster in order to operate. (I know that vMotion no longer requires this in vSphere 5.1, but I will explain later in this article why you still want it. For now, assume it is required.)

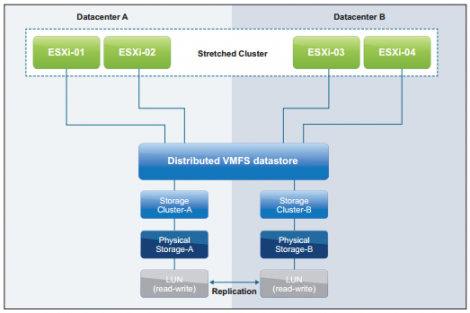

In a traditional setup, each site has its own vSphere clusters that see only the LUNs local to that site. In such a setup you cannot vMotion VMs or apply VMware HA across sites. You might think, "I could connect hosts across sites to the same storage and build a single vSphere cluster across sites that way." While it is possible to do it this way, you would create a cross-site single point of failure: the single storage array that all hosts connect to. This particular problem is what VPLEX was originally created to address. EMC VPLEX can create a virtual distributed LUN that looks like a single LUN to the ESXi hosts on both sites, while in the back end that LUN is actually replicated across two different LUNs living on different storage boxes. Those back-end arrays can be normal EMC storage (e.g. VMAX, DMX, VNX) or any non-EMC storage (e.g. IBM DS8000). It really does not matter what storage you use at the back end, because the ESXi hosts are not aware of it: it is masked/virtualized by the EMC VPLEX (or equivalent) and presented to the ESXi hosts as a distributed VMFS datastore that spans both sites. This in itself resolves the largest challenge of building a vSphere stretched cluster. The diagram below should give you a good illustration of what a stretched VMFS datastore looks like and how EMC VPLEX works.

Alright, before going into best practices, let me clarify why I said you should assume shared storage is required for vMotion in this scenario, although vSphere 5.1 can do vMotion without shared storage. The reason is that Enhanced vMotion effectively carries out a Storage vMotion in the background, so all of the VM's data has to be copied across LUNs. That can overload your WAN links and is a much heavier operation than you normally want in such a setup. Carrying out a normal vMotion on a shared distributed VMFS datastore is a much lighter and friendlier operation. I am actually not sure whether Enhanced vMotion has been tested in a stretched cluster setup yet, or whether it is supported in that manner. Maybe someone can shed some light on this in the comments area. Either way I would not recommend it, and with EMC VPLEX in place you really don’t need it.

When you decide to build an EMC VPLEX vSphere 5.1 Stretched Cluster, one of the first decisions you will need to make is whether to use a Uniform or a Non-uniform VMware vMSC. Before you can make that decision or debate the advantages and disadvantages of each, you will need to understand each configuration and how it is set up. A good post showing the differences between them in detail can be found at: http://www.yellow-bricks.com/2012/11/13/vsphere-metro-storage-cluster-uniform-vs-non-uniform/ . Below is a short description of the Uniform and Non-uniform VMware vMSC; if you require more details, please refer to that article.

• Uniform host access configuration – ESXi hosts from both sites are all connected to a storage node in the storage cluster across all sites. Paths presented to ESXi hosts are stretched across distance. The image below demonstrates the connections in a Uniform VMware vMSC configuration.

• Non-uniform host access configuration – ESXi hosts in each site are connected only to storage node(s) in the same site. Paths presented to ESXi hosts from storage nodes are limited to the local site. The image below demonstrates the connections in a Non-uniform VMware vMSC configuration.

Alright, now you have an idea of how host connectivity differs between a Uniform and a Non-uniform VMware vMSC. Many of you will figure out, just by looking at the diagrams above, that a Uniform VMware vMSC needs very fast connectivity between the two sites with very low latency. In fact, if you use a Uniform configuration, the maximum supported latency of the synchronous storage replication link drops from 5 milliseconds RTT (in a Non-uniform configuration) to 1 millisecond RTT (in a Uniform configuration). That means that before you get excited about implementing a Uniform configuration, you need to make sure that your synchronous storage replication link can deliver a latency of no more than 1 millisecond RTT. You also need a large enough pipe for hosts to run VMs from LUNs on the other site, because in a Uniform configuration each host has access to LUNs on both sides and can run VMs from storage on either side.
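To make the latency rule concrete, here is a minimal Python sketch that checks which configuration a measured round-trip time would allow. The 1 ms and 5 ms limits are the ones quoted above for VPLEX with vSphere 5.1; the function names and the sample values are made up for illustration, and you should confirm the limits with your storage vendor.

```python
# RTT limits quoted in this post for EMC VPLEX with vSphere 5.1.
# Treat them as assumptions and confirm them with your storage vendor.
MAX_RTT_MS = {"uniform": 1.0, "non-uniform": 5.0}


def worst_case_rtt(samples_ms):
    """Return the worst observed RTT; size against peaks, not the average."""
    if not samples_ms:
        raise ValueError("at least one RTT sample is required")
    return max(samples_ms)


def supported_configurations(measured_rtt_ms):
    """Return the vMSC host access configurations whose RTT limit is met."""
    return [name for name, limit in MAX_RTT_MS.items() if measured_rtt_ms <= limit]


if __name__ == "__main__":
    samples = [0.8, 1.4, 0.9, 2.1]  # hypothetical measurements in milliseconds
    rtt = worst_case_rtt(samples)
    print(rtt, supported_configurations(rtt))
```

I check against the worst sample rather than the average because a link that only occasionally exceeds the limit is still outside the supported envelope at those moments.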

While a Uniform VMware vMSC requires larger replication links and lower latency between sites, it has one big advantage: if the full VPLEX setup at a particular site fails, the ESXi hosts continue operating without interruption from the other site's storage. In a Non-uniform configuration, on the other hand, such a failure can cause an APD (All Paths Down) situation in which all the VMs on that site go down, and it might require manual intervention if the APD/PDL advanced parameters are not set up in vSphere. A Non-uniform VMware vMSC does not require as large a pipe between the two sites' storage and allows a somewhat higher latency (5 ms RTT rather than 1 ms), but it makes APD/PDL (Permanent Device Loss) situations more probable, and you will need to be prepared to respond to them. For more information about APD/PDL, you might want to read the following post by Cormac Hogan, which documents both in a very detailed and well-written manner: http://cormachogan.com/2012/09/07/vsphere-5-1-storage-enhancements-part-4-all-paths-down-apd/

Another thing to take care of in a vSphere Stretched Cluster is that VMware HA, DRS, and SDRS are still not site aware out of the box, so you should tweak them a bit to simulate site awareness. The recommendations below will help you achieve that:

- Use affinity groups to create site awareness (use should rules for DRS). Make sure to group each VM with its preferred site, so that in normal operation VMs always run on their preferred site using local storage and only move to the DR site if the site they normally run on fails. Use should rules rather than must rules when setting up your affinity groups: you still want VMs to fail over to the other site during a VMware HA event, and a must rule will not allow that and will prevent the VMs from restarting on the other site.

- Avoid cross-site vMotion/Storage vMotion. While affinity groups stop DRS from moving VMs to the non-preferred site/storage, nothing stops an admin from manually moving a VM with vMotion or Storage vMotion to the non-preferred site. Make sure this is well documented in your operational/change management procedures to keep admins from making this mistake. The reason you want each VM to stick to its preferred site is that in a split situation between the two sites, VMs running on the non-preferred site can lose access to storage, causing an APD/PDL situation in addition to the downtime those VMs will suffer.

- Set Storage DRS to Manual. You don’t want Storage DRS to move your VMs to a LUN on the non-preferred storage. Furthermore, each time a VM is moved using Storage vMotion, it has to be replicated again to the DR site, which leaves that VM unprotected by the stretched cluster until the replication completes. And if many Storage vMotion events take place at once, they can overload the inter-site links, which is why you want to schedule those moves for off-peak hours and apply the recommendations manually when needed.

- Co-locate multi-VM applications. VMs which have dependencies on each other & have a lot of network traffic between them should be configured to stick together on the same site to avoid wasting your expensive WAN link bandwidth.

- Set HA Admission Control to 50% CPU/Memory. As HA is not site aware, you want to make sure it keeps enough resources free on each side to handle failing over all the VMs from the other site in case of a full site failure. You can achieve this by setting HA Admission Control to 50% for both CPU and memory. Many customers are surprised by this recommendation, though in my opinion it is an obvious requirement, so please make sure to size your stretched cluster accordingly.

- Use four heartbeat datastores (two at each site). By default vSphere HA uses two heartbeat datastores, which is more than enough when all the HA cluster hosts sit on a single site. In a vSphere Stretched Cluster, however, you want two heartbeat datastores per site, so that the hosts at each site can still reach two heartbeat datastores if connectivity between the sites is lost. To set up HA to use four heartbeat datastores, set the vSphere HA advanced parameter das.heartbeatDsPerHost to 4, then choose the four datastores to use in the vSphere cluster wizard. Make sure you choose two at each site.

- Specify two isolation addresses, one in each site. Make sure you specify two HA isolation addresses, one at each site, using the advanced setting “das.isolationaddress”. The reason is that if connectivity between the two sites is lost, each site will still be able to reach its own HA isolation address and will not invoke an HA isolation response, which could otherwise cause all the VMs in a particular site to shut down or power off, depending on your isolation response configuration.
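The HA settings from the list above can be sketched in a few lines of Python. This is only an illustration that builds a plain dictionary and checks sizing; it does not talk to vCenter. The function names, datastore names, and IP addresses are hypothetical. I am also assuming the isolation addresses are entered as numbered keys (das.isolationaddress0, das.isolationaddress1) and that the heartbeat option is spelled das.heartbeatDsPerHost as in VMware's documentation; please verify both against your vSphere version.

```python
def admission_control_percent(site_count=2):
    """Share of cluster CPU/memory to reserve so one full site can fail."""
    return 100 // site_count


def site_can_absorb_failover(site_capacity, cluster_demand):
    """True if one site alone can run the whole cluster's VM demand."""
    return all(
        site_capacity[resource] >= cluster_demand[resource]
        for resource in ("cpu_mhz", "memory_mb")
    )


def ha_advanced_options(isolation_addresses, heartbeat_datastores_by_site):
    """Build the HA advanced options discussed above as a plain dict.

    The numbered das.isolationaddressN keys are an assumption; check the
    exact option names for your vSphere release before using them.
    """
    for site, datastores in heartbeat_datastores_by_site.items():
        if len(datastores) != 2:
            raise ValueError(f"site {site!r} needs exactly two heartbeat datastores")
    total = sum(len(ds) for ds in heartbeat_datastores_by_site.values())
    options = {"das.heartbeatDsPerHost": str(total)}
    for index, address in enumerate(isolation_addresses):
        options[f"das.isolationaddress{index}"] = address
    return options


if __name__ == "__main__":
    options = ha_advanced_options(
        ["10.0.1.1", "10.0.2.1"],  # hypothetical gateway at each site
        {"site-a": ["ds-a1", "ds-a2"], "site-b": ["ds-b1", "ds-b2"]},
    )
    print(admission_control_percent(), options)
```

Validating the two-per-site rule in code is a cheap way to catch the common mistake of picking all four heartbeat datastores from the same site.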

Make sure to use HA restart priorities. HA does not respect vApp restart priorities when prioritizing VM failover. Use HA restart priorities instead to ensure that your most critical VMs get started first. The sequence below shows the order in which VMware HA starts VMs:

1. Agent virtual machines

2. FT secondary virtual machines

3. Virtual machines configured with a high restart priority

4. Virtual machines configured with a medium restart priority

5. Virtual machines configured with a low restart priority

There is no guarantee that HA will be able to restart all of your VMs. HA attempts to restart failed VMs in order, but if a VM fails to start, HA simply moves on to the next VM, even if the one that failed has a higher priority. For this reason you will want a good process in place that checks whether all of your VMs and services have been successfully restored after a site failure.
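As a rough sketch of such a process, the Python below orders VMs the way the sequence above describes and then reports which expected VMs are not running after a failover. The category labels, VM names, and function names are made up for illustration; in a real environment the list of powered-on VMs would come from your inventory tooling.

```python
# Restart order as listed in this post; the labels themselves are hypothetical.
RESTART_ORDER = ["agent", "ft_secondary", "high", "medium", "low"]


def restart_sequence(vms):
    """Sort (name, category) pairs into the order HA attempts restarts.

    sorted() is stable, so VMs in the same category keep their input order.
    """
    return sorted(vms, key=lambda vm: RESTART_ORDER.index(vm[1]))


def not_restored(expected_names, powered_on_names):
    """Return the names that should be running after failover but are not."""
    return sorted(set(expected_names) - set(powered_on_names))


if __name__ == "__main__":
    vms = [("web01", "low"), ("db01", "high"), ("agent01", "agent"), ("app01", "medium")]
    print([name for name, _ in restart_sequence(vms)])
    print(not_restored(["db01", "app01", "web01"], ["db01", "web01"]))
```

The second function is the important one: since HA carries on past a failed restart, comparing the expected list with what is actually powered on is the only way to know that something was left behind.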

Don’t host vCenter or the Witness server within your stretched cluster. To maintain manageability and visibility into your environment in case of a site or VPLEX failure, host your vCenter and Witness server on separate infrastructure that is not backed by VPLEX, preferably at a third site.

Make sure to test all the failover scenarios, especially the ones that require manual intervention, and learn how to respond to them. Each hardware vendor has its own document describing the failure scenarios in a vSphere Stretched Cluster and how to respond to them. Make sure you request that document, verify that your testing of each failure scenario matches the expected result, and confirm that you are ready to respond, especially where manual intervention is required.

Try to automate tasks when possible (vCenter Orchestrator!). When things fail and you need to respond manually, you have a much higher chance of making errors, especially when panicking. If you have already automated the recovery process and tested it well before the failure, you only need to watch it execute, rather than scratching your head trying to remember how you brought the setup back online last time.
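One small check that is easy to automate is finding VMs that have drifted off their preferred site, for example after a manual vMotion. The Python sketch below works on plain dictionaries; the VM, host, and site names are hypothetical, and how you export this data from vCenter is up to your own tooling.

```python
def vms_off_preferred_site(vm_preferred_site, vm_current_host, host_site):
    """Return {vm: (preferred_site, actual_site)} for VMs running off-site."""
    drift = {}
    for vm, preferred in vm_preferred_site.items():
        host = vm_current_host.get(vm)
        if host is None:
            continue  # VM is not running or not in the export; skip it
        actual = host_site[host]
        if actual != preferred:
            drift[vm] = (preferred, actual)
    return drift


if __name__ == "__main__":
    preferred = {"db01": "site-a", "web01": "site-b"}      # hypothetical mapping
    running_on = {"db01": "esx-b1", "web01": "esx-b2"}     # hypothetical export
    host_site = {"esx-a1": "site-a", "esx-b1": "site-b", "esx-b2": "site-b"}
    print(vms_off_preferred_site(preferred, running_on, host_site))
```

Running something like this on a schedule gives you a chance to move a VM back to its preferred site before a site split turns the drift into an outage.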

vSphere Metro Storage Cluster (vMSC) is a new storage configuration for VMware vSphere environments and is recognized with its own Hardware Compatibility List (HCL) classification. All supported vMSC storage devices are listed in the VMware Storage Compatibility Guide. Make sure the storage and setup you are planning to use are on that list.

Lastly, I hope many of you find this info useful. If you have access to the VMworld online sessions, I highly recommend watching the following session. I attended it live at VMworld Barcelona and it was a fantastic session; much of the info posted here I learned there:

VMworld 2012 Session: INF-BCO1159 – Architecting and Operating a VMware vSphere Metro Storage Cluster by Duncan Epping & Lee Dilworth

As usual, please leave your comments, thoughts, and suggestions in the comments area below.

9 responses to “EMC VPLEX – vSphere 5.1 Stretched Cluster Best Practices”

Funny, the website you refer to borrowed all of that from a white paper I authored not too long ago:

http://www.yellow-bricks.com/2012/05/23/vsphere-metro-storage-cluster-white-paper-released/

Duncan Epping

Yellow-Bricks.com

Hi Duncan,

I am not sure what to tell you on this one. I have tried to give you credit where due, as I got a lot of this from your VMworld presentation, but that blog does not even reference you.

I am not sure what I should do in this particular situation, as I really did get the info from that blog. Now that it turns out he copied from you without giving credit, do I need to reference the white paper instead, or are you following up with that blogger to get credit in his post? Let me know what you think.

Thanks,

Eiad

a few typos that need to be corrected. else, very insightful! thanks ..

example :

Further each time a VM is moved using storage VMotion, it has to be replicated again to the DR site which get those VMs to be in a none protected state by the stretched cluster till the replication is completed.

You need to make it non-protected state instead of a none protected state

regards

Glad you find it insightful. I have gone through and fixed the few typos you pointed out :).

Hi,

Thanks for the article.

regarding the “Specify two isolation addresses one in each site” recommendation:

As far as I know, the default isolation address is the default gateway. In that case each host will try to ping its own default gateway, which prevents an isolation response if connectivity between the two sites is lost.

can you give your opinion on that?

While having each host use a single isolation address, its default gateway, would work, adding the second gateway gives you more resiliency in case your local gateway, or just its IP address, is down.